Fielding

2017 by Till Bovermann

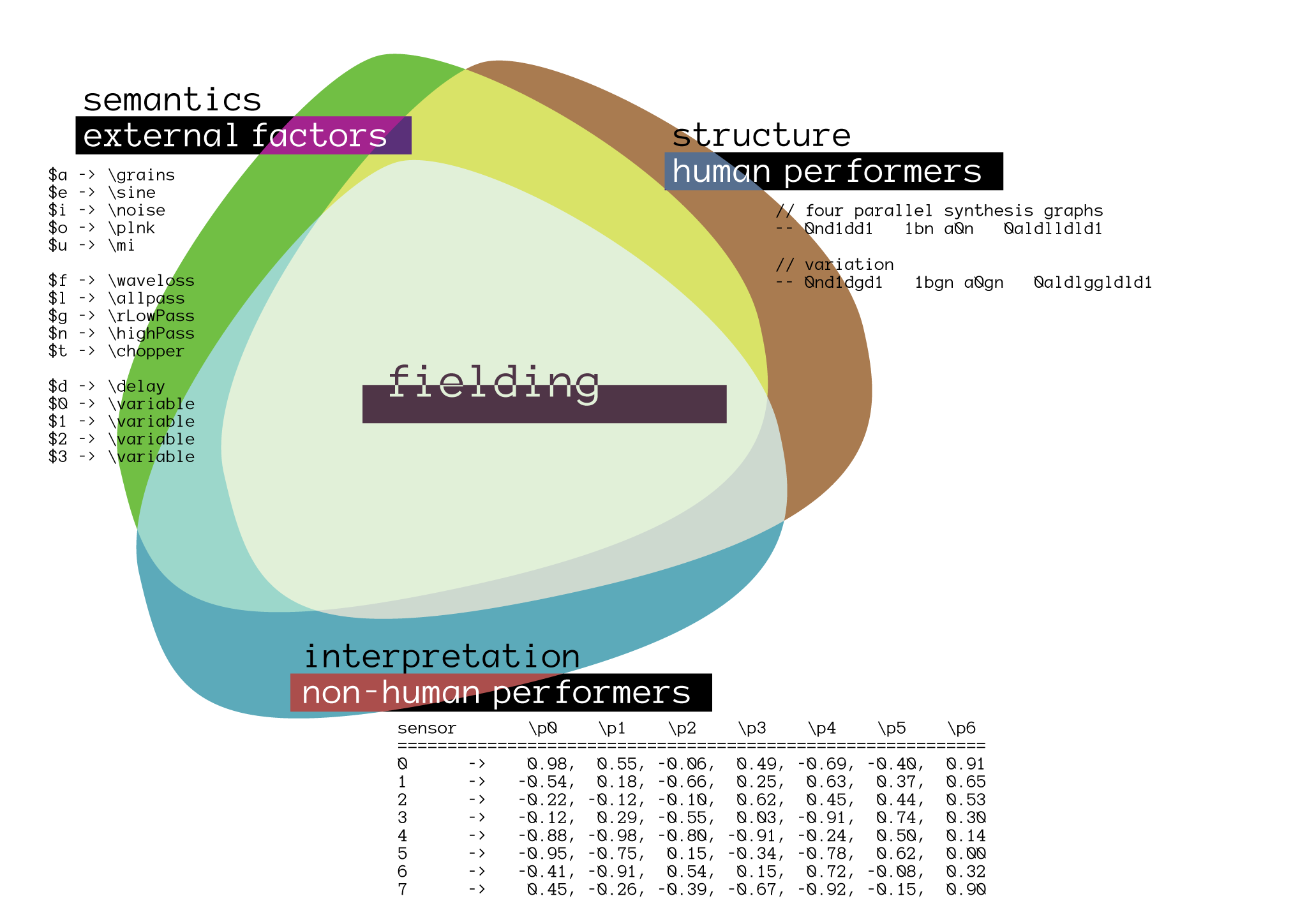

Fielding invites (non-)human beings to shape and form an artificial soundscape. Fielding consists of semi-autonomous Nodes that are wirelessly interconnected and share a common acoustic basis.

Fielding conceptual

Non-human performers influence Fielding through environmental sensors (temperature, moisture, light) built into the nodes. The sensed values determine the current state of the parameters of each node’s sound sources and filters. How strongly the sensors act on these parameters is determined by external factors (see below).

Human performers shape Fielding’s sonic qualities by forming sentences in a sonic alphabet. This structural information is sent to all nodes, whereas the sensory information coming from the plants remains local to each node.
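The split between shared structure and local sensing can be sketched as follows. This is a minimal illustration, not Fielding's actual implementation: the `Node` class, its fields, and the `broadcast` function are hypothetical, and the real nodes communicate wirelessly rather than through a Python list.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical model of one Fielding node."""
    name: str
    # Local sensor readings (temperature, moisture, light); never shared.
    sensors: dict[str, float] = field(default_factory=dict)
    # Structural information received from the human performers.
    sentence: str = ""

    def receive(self, sentence: str) -> None:
        self.sentence = sentence


def broadcast(nodes: list[Node], sentence: str) -> None:
    """Send the same sentence to every node (stand-in for wireless transport)."""
    for node in nodes:
        node.receive(sentence)


nodes = [
    Node("A", {"temperature": 21.5, "moisture": 0.40, "light": 0.80}),
    Node("B", {"temperature": 18.0, "moisture": 0.65, "light": 0.20}),
]
broadcast(nodes, "0nd1dd1 1bn a0n 0aldlldld1")
for node in nodes:
    # Same sentence on every node, but each keeps its own sensor values.
    print(node.name, node.sentence, node.sensors)
```

Every node ends up with the same sentence, while the sensor dictionaries stay different, so the same structure can sound different at each node.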

Fielding’s structural foundation is based on an alphabet, a finite set of sonic elements.

Each element is represented by a single character of the set [ e, a, u, o, i, t, f, l, g, n, d, 0, 1, 2, 3 ].

```
// four parallel synthesis graphs
-- 0nd1dd1 1bn a0n 0aldlldld1

// variation
-- 0nd1dgd1 1bgn a0gn 0aldlggldld1
```
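A sentence consists of space-separated words, each describing one of the parallel synthesis graphs. Comparing the two examples word by word shows that the variation inserts the character g into every graph; in the example configuration on this page, g maps to `\rLowPass`. The helper names below are illustrative only.

```python
def graphs(sentence: str) -> list[str]:
    """Split a sentence into its parallel synthesis graphs (one per word)."""
    return sentence.split()


def without(graph: str, char: str) -> str:
    """Remove every occurrence of one sonic element from a graph."""
    return graph.replace(char, "")


original = graphs("0nd1dd1 1bn a0n 0aldlldld1")
variation = graphs("0nd1dgd1 1bgn a0gn 0aldlggldld1")

print(len(original))  # 4
# Removing every g from the variation recovers the original graphs.
for a, b in zip(original, variation):
    print(a, b, without(b, "g") == a)
```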

In this configuration, the digits 0, 1, 2, and 3 are variables that sound can be written to and read from.

d stands for a short, time-varying delay line.
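The roles of the characters can be read off a graph mechanically: digits are shared variables, d is a delay line, and, following the rule that vowels are sources and consonants are filters, every other letter is either a source or a filter. The sketch below classifies each character and lists which variables each graph touches; since sound can be written to a variable in one graph and read from it in another, digits that appear in several graphs indicate how the parallel graphs are coupled. The role names and functions are hypothetical, and the sketch does not model the order of writing and reading within a graph.

```python
VOWELS = set("aeiou")
DIGITS = set("0123")


def role(char: str) -> str:
    """Classify one sonic element by its role."""
    if char in DIGITS:
        return "variable"
    if char == "d":
        return "delay"
    if char in VOWELS:
        return "source"
    if char.isalpha():
        return "filter"  # any other letter is a consonant
    raise ValueError(f"not a sonic element: {char!r}")


def variables(graph: str) -> set[str]:
    """Variables (digits) referenced anywhere in a graph."""
    return {c for c in graph if c in DIGITS}


sentence = "0nd1dd1 1bn a0n 0aldlldld1"
graphs = sentence.split()
for graph in graphs:
    print(graph, [role(c) for c in graph], sorted(variables(graph)))

# Variables referenced by more than one graph couple those graphs.
shared = {v for v in DIGITS if sum(v in g for g in graphs) > 1}
print(sorted(shared))  # ['0', '1']
```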

The concrete implementation of the remaining sonic elements, and hence the aesthetic possibility space of a performance, is determined by external factors such as the date of the performance, the moon phase, and other planetary configurations. Their current configuration determines which sound patterns are mapped to which characters. However, vowels always represent sound sources, whereas consonants always represent filters.

```
// example configuration
$a -> \grains     $f -> \waveloss  $d -> \delay
$e -> \sine       $l -> \allpass   $0 -> \variable
$i -> \noise      $g -> \rLowPass  $1 -> \variable
$o -> \plnk       $n -> \highPass  $2 -> \variable
$u -> \mi         $t -> \chopper   $3 -> \variable
```
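The mapping step can be pictured as a deterministic shuffle seeded by the performance date and the moon phase: source patterns are distributed over the vowels and filter patterns over the consonants, so the vowel/consonant rule holds for every possible date. This is a hypothetical illustration, not the project's actual algorithm. The pattern pools are taken from the example configuration, the moon phase is a rough approximation based on a mean synodic month of about 29.53 days and the new moon of 6 January 2000, and other planetary configurations are ignored.

```python
import random
from datetime import date, datetime, timezone

SYNODIC_MONTH = 29.530588853  # mean length of a lunar cycle in days
# A commonly used reference new moon (approximate).
REFERENCE_NEW_MOON = datetime(2000, 1, 6, 18, 14, tzinfo=timezone.utc)

# Pattern pools taken from the example configuration.
SOURCES = ["grains", "sine", "noise", "plnk", "mi"]
FILTERS = ["waveloss", "allpass", "rLowPass", "highPass", "chopper"]
VOWELS = ["a", "e", "i", "o", "u"]
CONSONANTS = ["f", "l", "g", "n", "t"]


def moon_phase(day: date) -> float:
    """Approximate lunar phase in [0, 1): 0 = new moon, 0.5 = full moon."""
    noon = datetime(day.year, day.month, day.day, 12, tzinfo=timezone.utc)
    days = (noon - REFERENCE_NEW_MOON).total_seconds() / 86400
    return (days % SYNODIC_MONTH) / SYNODIC_MONTH


def configuration(day: date) -> dict[str, str]:
    """Hypothetical mapping of characters to sound patterns for one date."""
    phase_octant = int(moon_phase(day) * 8)  # eight coarse moon phases
    rng = random.Random(day.toordinal() * 8 + phase_octant)
    sources = rng.sample(SOURCES, k=len(SOURCES))
    filters = rng.sample(FILTERS, k=len(FILTERS))
    mapping = dict(zip(VOWELS, sources))       # vowels -> sources
    mapping.update(zip(CONSONANTS, filters))   # consonants -> filters
    mapping["d"] = "delay"                     # d is always a delay line
    mapping.update({v: "variable" for v in "0123"})
    return mapping


config = configuration(date(2017, 6, 21))
for char, pattern in config.items():
    print(f"${char} -> \\{pattern}")
```

Because the seed depends only on the date, every node computes the same mapping for a given performance without having to exchange it.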

Similarly, these external factors set how strongly each node’s sensors influence the sounds.
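One way to picture this is a per-parameter weight, set by the external factors, that blends a parameter's default value with a value derived from a normalized sensor reading. The linear blend, the `Parameter` class, and the example values below are assumptions for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Parameter:
    """Hypothetical synthesis parameter driven by one environmental sensor."""
    default: float
    low: float
    high: float
    sensor: str       # e.g. "temperature", "moisture" or "light"
    influence: float  # 0.0 = sensor ignored, 1.0 = sensor fully in control

    def value(self, readings: dict[str, float]) -> float:
        """Blend the default with the sensor-driven value.

        `readings` holds sensor values normalized to [0, 1]; out-of-range
        values are clamped.
        """
        reading = min(max(readings[self.sensor], 0.0), 1.0)
        sensed = self.low + reading * (self.high - self.low)
        return (1.0 - self.influence) * self.default + self.influence * sensed


# Hypothetical filter cutoff in Hz, half controlled by the light sensor.
cutoff = Parameter(default=1000.0, low=200.0, high=5000.0,
                   sensor="light", influence=0.5)
print(cutoff.value({"light": 0.8}))
```

With an influence of 0 the node plays its default sound regardless of its surroundings; with an influence of 1 the parameter follows the sensor across its full range.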

Besides a lot of dedication, Fielding is built on the shoulders of great projects such as Steno, Bela, BeagleBoard, and SuperCollider. It was developed by Till Bovermann in 2017.

- Affiliation:
  - Music residency at the Institut für Elektronische Musik und Akustik (IEM) in Graz, Austria
  - Artist residency at iii, The Hague