While my background is in computer science and sonification, I find myself increasingly working on and with sound art, interaction design and music making. Over recent years, a multitude of loose ends has appeared in my work and artistic practice, which I intend to tie together during the IEM music residency. One major part of my residency is dedicated to what David Pirrò (unconsciously?) coined “fielding”: integrating field recording, improvisational electronic music practice, livecoding, and sonic wilderness interventions with generative dynamic systems.

Background

Sonic wilderness intervention

sonic wilderness interventions 2015 in Kilpisjärvi, Finland

When a group of six sound practitioners came together in 2015 to engage in a series of sonic wilderness interventions (SIW) with portable electronic instruments, it soon became clear that the practice of playing outdoors in “sonic wilderness”1 not only creates a feeling of closeness and humility, but also raises questions about performance practice. These questions reach well beyond SIW, and reflecting on them may contribute to an understanding of performance practice in more conventional settings.

Of these emerging questions, one in particular will be addressed in my work around fielding: how can the environment be integrated into the performance, and possibly become a playing partner?

Non-human agency and performance practice

Anemos Sonore Maaum Siilium, an artificial plant with sonic utterances. (2016, Till Bovermann, Katharina Hauke)

Anemos Sonore Maaum Siilium was the first study that addressed the question of integrating the environment into the performance itself. Together with Katharina Hauke, I constructed an artificial plant that senses its surroundings and makes sounds and music informed by what it perceives. In particular, it has a “root”, leaves and branches with which it feels moisture in the soil (by measuring the resistance between several probes) and wind turbulence (by means of a piezo microphone attached to the metallic branches swaying in the wind).

These sensory readings are directly and immediately translated into sound: the rattling captured by the piezo is filtered, and depending on the circumstances, the plant sings different songs.
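To make the idea of a sensor reading shaping a filtered piezo signal more concrete, here is a minimal sketch in Python. It is not the plant's actual code: the one-pole low-pass filter, the normalised `moisture` value, the frequency range and all function names are assumptions chosen purely for illustration.

```python
import math

def one_pole_lowpass(samples, cutoff_hz, sample_rate=44100.0):
    """Smooth a sequence of samples with a simple one-pole low-pass filter."""
    # Feedback coefficient of a one-pole filter for the given cutoff.
    a = math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    out = []
    y = 0.0
    for x in samples:
        y = (1.0 - a) * x + a * y
        out.append(y)
    return out

def cutoff_from_moisture(moisture, f_low=200.0, f_high=4000.0):
    """Map a normalised moisture value (0 = dry, 1 = wet) to a cutoff in Hz.

    Hypothetical mapping: exponential interpolation between f_low and f_high,
    so equal moisture steps correspond to equal musical intervals.
    """
    m = min(max(moisture, 0.0), 1.0)
    return f_low * (f_high / f_low) ** m

# Usage: the same "rattle" sounds duller in dry soil than in wet soil.
rattle = [1.0, -1.0, 0.5, -0.5, 0.25, -0.25]
dull = one_pole_lowpass(rattle, cutoff_from_moisture(0.1))
bright = one_pole_lowpass(rattle, cutoff_from_moisture(0.9))
```

In this sketch the moisture reading only moves the cutoff frequency; any other sensor value could be substituted for it in the same way.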

Conceptual overview (as of now)

Layers of interaction

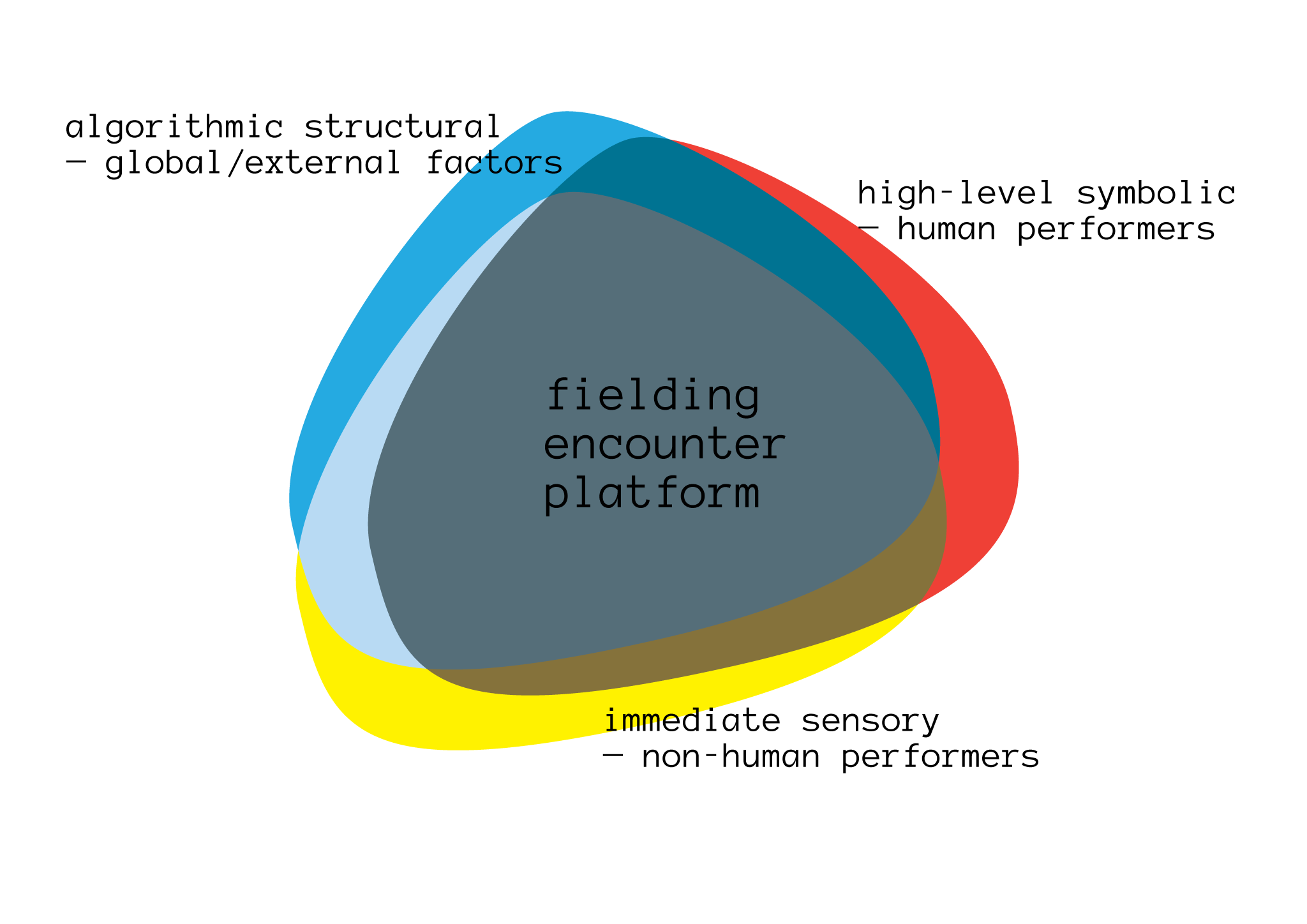

Fielding interacts with its environment in three tightly interlaced layers.

- The condition of the algorithmic structural layer is determined by relatively slowly changing external influences, for example planetary constellations or the sun’s altitude at the performance location. The layer’s influence on the system can be interpreted as a basis of potentiality that sets the frame for possible soundscapes.

- The immediate sensory layer integrates both short- and long-term states measured locally at the performance site, e.g. pressure, CO2 level, temperature, humidity, luminosity, overall hue or the sonic qualities of air movements through artificial branches. The layer influences the system by providing movement and state change within the elements that were chosen by the algorithmic structural layer.

- The high-level symbolic layer then allows the chosen elements to be combined on a symbolic level. One or more human performers operate on a tree structure, effectively inserting and deleting sonic elements.
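As an illustration of a slowly changing influence for the structural layer, the following sketch estimates the sun’s altitude from time and location and uses it to pick a frame of possible material. It relies on a common textbook approximation (it ignores the equation of time and atmospheric refraction), and the palette names and thresholds are hypothetical placeholders, not part of the planned system.

```python
import math
from datetime import datetime, timezone

def sun_altitude_deg(when_utc, lat_deg, lon_deg):
    """Approximate solar altitude in degrees for a UTC time and a location."""
    day_of_year = when_utc.timetuple().tm_yday
    # Approximate solar declination for this day of the year.
    decl = math.radians(-23.44) * math.cos(
        2.0 * math.pi * (day_of_year + 10) / 365.0
    )
    # Approximate local solar time from UTC and longitude (15 degrees per hour).
    hours_utc = when_utc.hour + when_utc.minute / 60.0 + when_utc.second / 3600.0
    solar_time = hours_utc + lon_deg / 15.0
    hour_angle = math.radians(15.0 * (solar_time - 12.0))
    lat = math.radians(lat_deg)
    sin_alt = (math.sin(lat) * math.sin(decl)
               + math.cos(lat) * math.cos(decl) * math.cos(hour_angle))
    return math.degrees(math.asin(max(-1.0, min(1.0, sin_alt))))

def choose_palette(altitude_deg):
    """Hypothetical mapping from sun altitude to a set of sonic elements."""
    if altitude_deg < -6.0:
        return "night"
    if altitude_deg < 6.0:
        return "twilight"
    return "day"

# Usage: noon and midnight (UTC) at latitude 0, longitude 0, near the equinox.
noon = datetime(2024, 3, 20, 12, 0, tzinfo=timezone.utc)
midnight = datetime(2024, 3, 20, 0, 0, tzinfo=timezone.utc)
palette_noon = choose_palette(sun_altitude_deg(noon, 0.0, 0.0))
palette_midnight = choose_palette(sun_altitude_deg(midnight, 0.0, 0.0))
```

Because the altitude changes only by fractions of a degree per minute, such a value would shift the frame gradually over the course of a performance, while the sensory layer moves within it.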

For the implementation of this system, I plan to use the meta-language steno.

Fielding interacts with the environment on three different layers.

Encounter platforms

Fielding consists of two to three semi-autonomous encounter platforms, each linked wirelessly with the others and equipped with loudspeakers and real-time sensors. Unlike in the drawing below, the platforms differ from each other in terms of connected sensors. One of them is connected to the laptop of a human performer.

Fielding consists of two to three semi-autonomous encounter platforms.

Hardware preparations

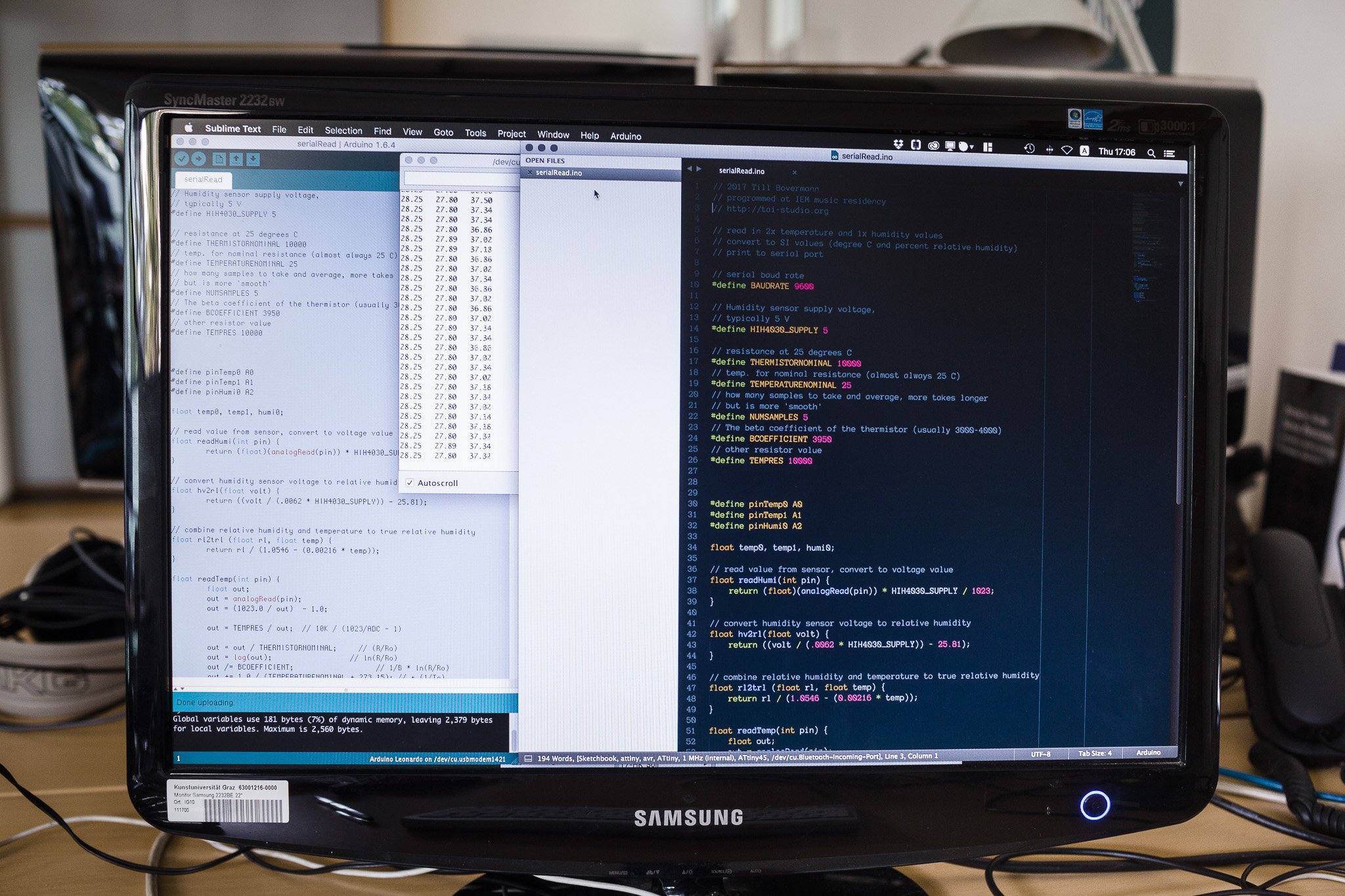

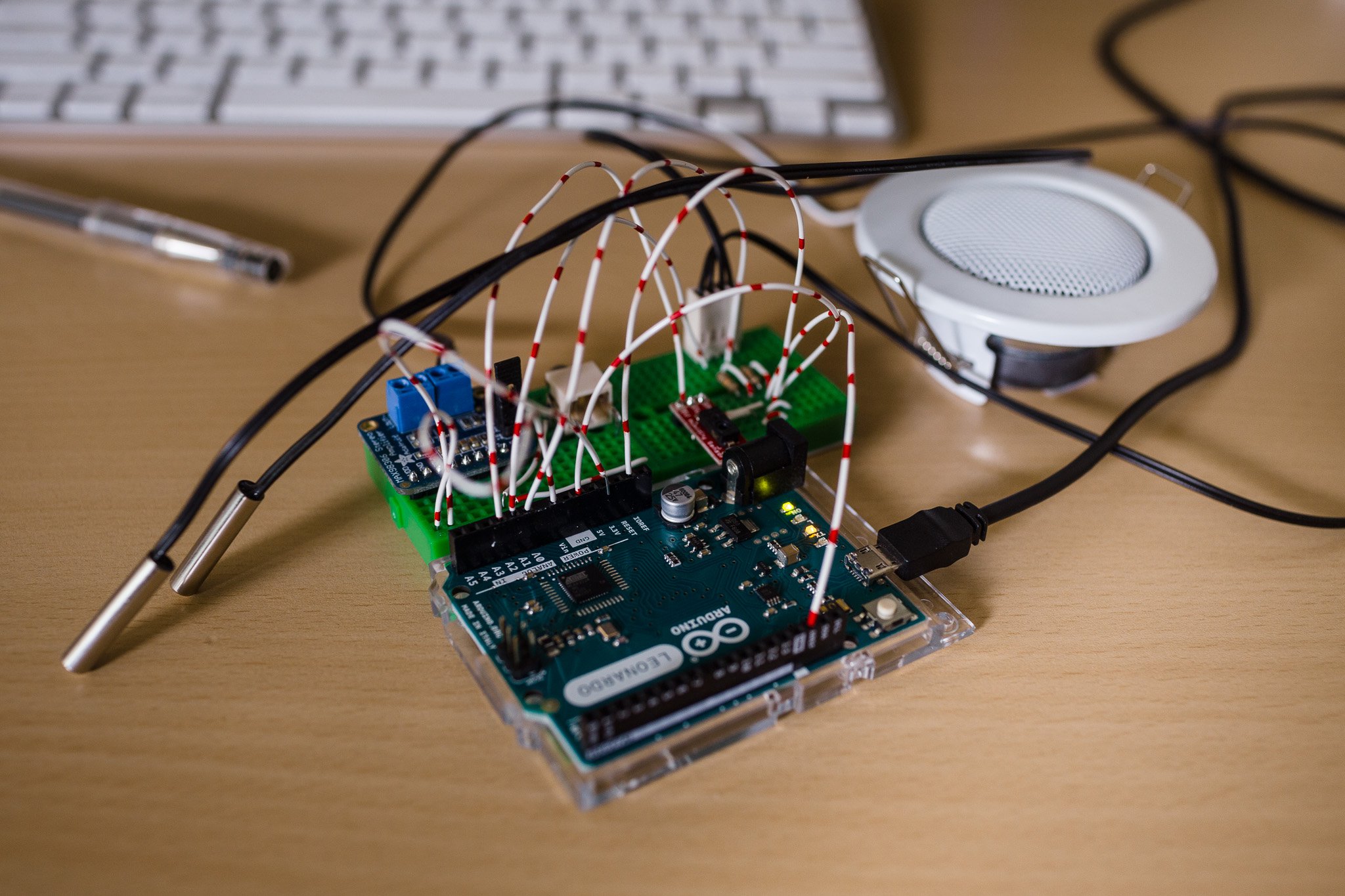

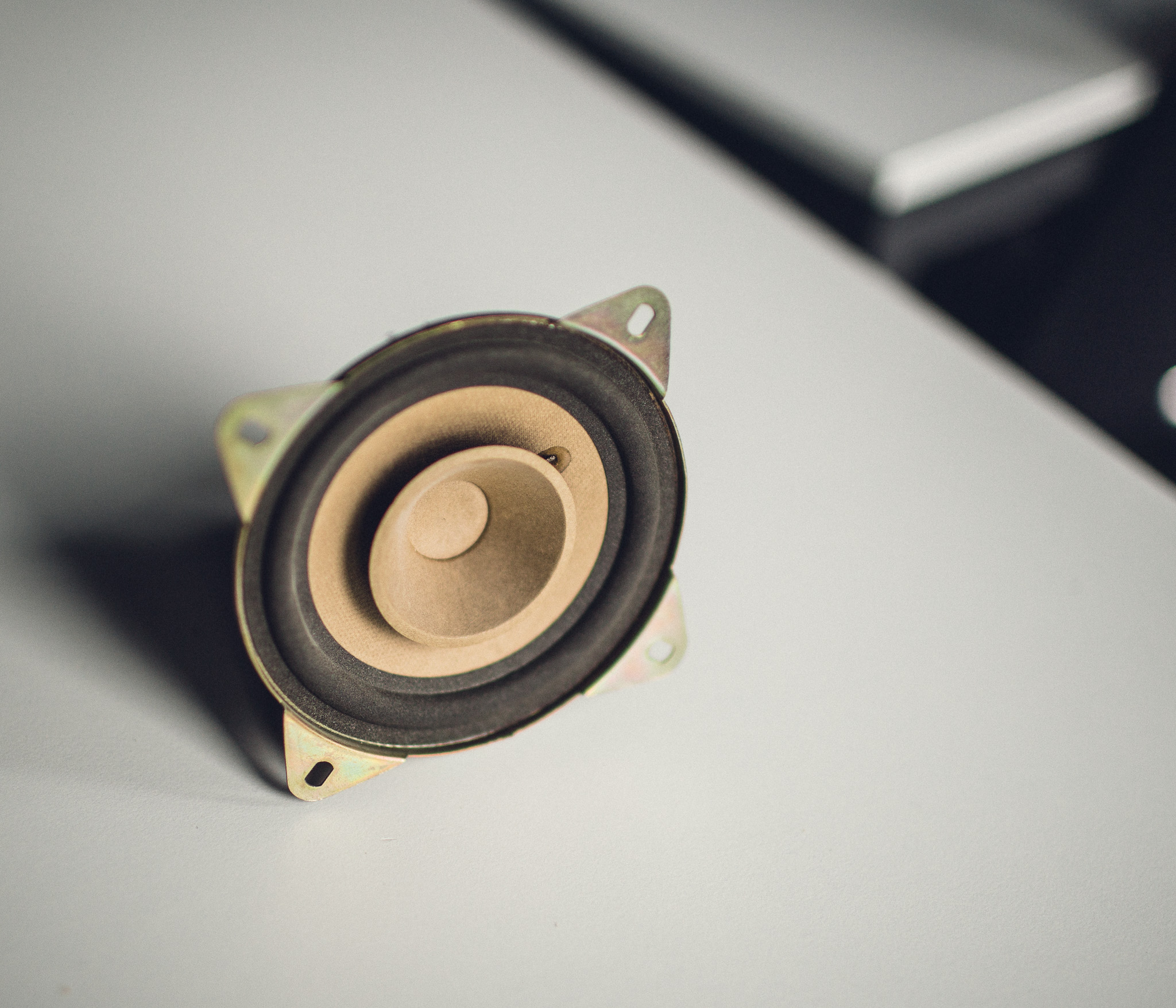

I spent part of the first two weeks at the IEM collecting and testing parts for an Arduino-based sensor and amplification node, which I plan to incorporate into the first prototype of the laptop-bound encounter platform. In addition to two thermistors (one intended to measure air temperature, one for the ground), I included a humidity sensor. I also tested various small, portable speaker chassis. Since the whole system will be powered by a 5V battery and the completed system should be portable, light and weather-resistant, I decided to concentrate on exponential horn tweeters, since they are made of plastic and do not expose moving parts. Fortunately, the IEM offered me three used tweeters (which unfortunately do not carry any model number). Another surprisingly powerful and good-sounding broadband speaker I considered is the Blaupunkt AL100. It did not meet my requirements because its cone is made of cardboard, which weakens easily, even in merely humid environments.
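For readers curious how a raw thermistor reading becomes a temperature, here is a host-side sketch of the arithmetic in Python. It is not the firmware running on the node: it assumes an NTC thermistor in a voltage divider read by a 10-bit ADC, and the resistor values and Beta coefficient are typical placeholder figures that would need to be replaced by the datasheet values of the actual parts.

```python
import math

def thermistor_celsius(adc_value, adc_max=1023, r_fixed=10_000.0,
                       r0=10_000.0, t0_celsius=25.0, beta=3950.0):
    """Convert an ADC reading to degrees Celsius using the Beta equation.

    Assumed wiring: supply -> fixed resistor -> ADC pin -> thermistor -> ground.
    All default values are hypothetical (a generic 10 kOhm NTC thermistor).
    """
    if not 0 < adc_value < adc_max:
        raise ValueError("reading at the rail: open or shorted sensor?")
    # Voltage divider: the ADC ratio equals r_therm / (r_fixed + r_therm).
    r_therm = r_fixed * adc_value / (adc_max - adc_value)
    # Beta equation: 1/T = 1/T0 + ln(R/R0) / beta, with T in kelvin.
    inv_t = 1.0 / (t0_celsius + 273.15) + math.log(r_therm / r0) / beta
    return 1.0 / inv_t - 273.15

# Usage: a mid-scale reading corresponds to roughly the reference temperature.
air_temperature = thermistor_celsius(512)
```

With an NTC part wired this way, higher readings mean higher resistance and therefore lower temperatures, which is a quick sanity check when comparing the air and ground sensors.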

Accessing sensors with Arduino

Sensors and a speaker attached to an Arduino

Reclaimed exponential horn tweeter

Reclaimed broadband speaker Blaupunkt A100

1. A detailed discussion of sonic wilderness interventions appeared as a chapter in the book on instrumentality. I discuss additional details on space and place for SIW in this text.