The other day, I was thinking about meta-control, a control mechanism I first heard of, under the name modality, at STEIM’s 2010 Modality team meeting. There, Jeff Carey talked us through his performance setup, which consists of a bunch of software instruments that he controls with three hardware interfaces: a joystick, a number pad of the kind used by gamers, and an MPC-style pushbutton controller. He reported that he had left the logic and sound rendering of the software instruments more or less fixed over the past 5 years, but that his performance practice made him search for ways to dynamically change mappings and control styles between the soft synths and the hardware interfaces.

In addition to standard mappings, such as the deflection of a joystick determining a sound’s frequency, he implemented several meta-control mechanisms. I describe the two most prominent ones below.

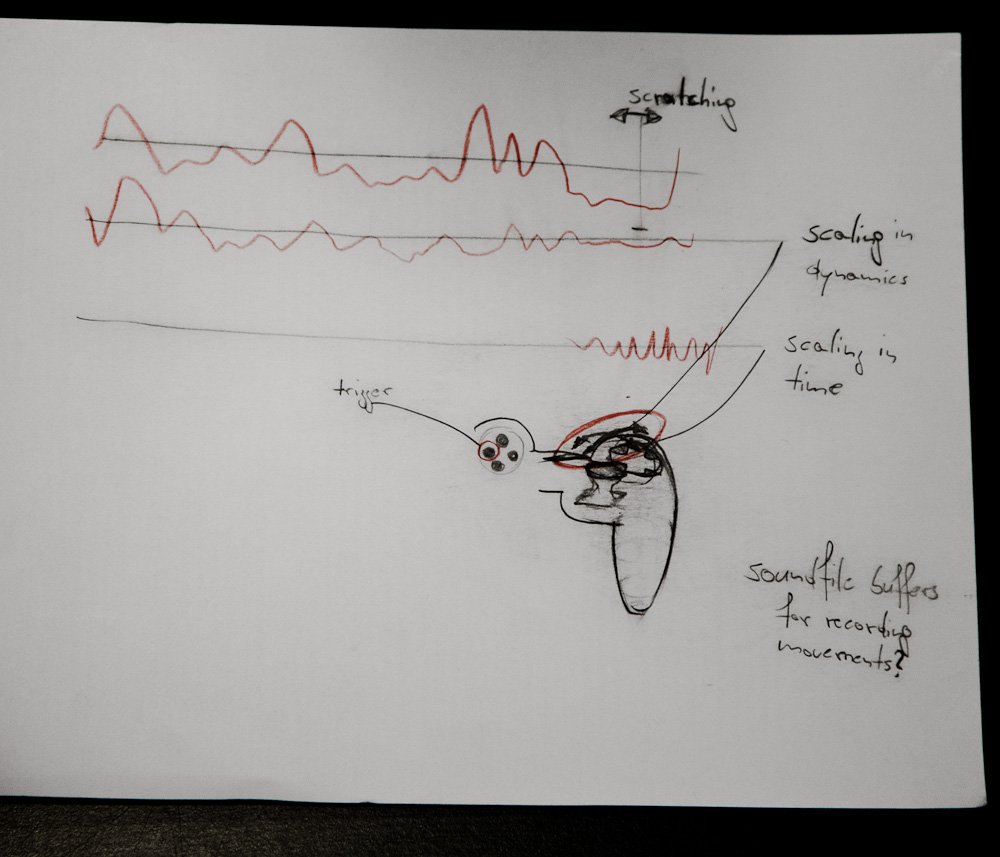

Recording and playback of control data

Dedicated buttons on a controller are used to start and stop the recording of the controller’s non-meta output data. The recorded data can then be played back using one or more of the following filter operations:

- Playback control
  - One shot trigger: play back once
  - Looped: play back repeatedly
- Time control
  - Time invert: play back reversed in time
  - Time scale: play back time is multiplied by a factor (stretch/shrink in time)
  - Time quantisation: value updates are quantised according to a given measure
- Level control
  - Level invert: values are negated
  - Level scale: values are multiplied by a factor
  - Level quantisation: values are quantised according to a given measure
  - Level overwrite: output is the maximum/minimum/average/addition/multiplication of the current state of the recorded control
- Re-map: values are spread to other synthesis parameters
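To make some of these filters concrete, here is a minimal Python sketch. It is not Jeff's implementation. It assumes, purely for illustration, that a recording is a list of `(time, value)` pairs sorted by time; all function names are hypothetical. One shot playback is simply the unmodified list, and level overwrite and re-map are left out.

```python
# Assumption: a recording is a list of (time_in_seconds, value) pairs,
# sorted by time. All names here are hypothetical illustrations.

def loop(events, length, repeats):
    """Looped playback: repeat the recording, shifting each pass by `length` seconds."""
    return [(t + n * length, v) for n in range(repeats) for t, v in events]

def time_invert(events):
    """Play back reversed in time: mirror every timestamp around the last one."""
    if not events:
        return []
    end = events[-1][0]
    return [(end - t, v) for t, v in reversed(events)]

def time_scale(events, factor):
    """Stretch (factor > 1) or shrink (factor < 1) the recording in time."""
    return [(t * factor, v) for t, v in events]

def time_quantise(events, grid):
    """Snap every timestamp to the nearest multiple of `grid`."""
    return [(round(t / grid) * grid, v) for t, v in events]

def level_invert(events):
    """Negate every value."""
    return [(t, -v) for t, v in events]

def level_scale(events, factor):
    """Multiply every value by `factor`."""
    return [(t, v * factor) for t, v in events]

def level_quantise(events, step):
    """Snap every value to the nearest multiple of `step`."""
    return [(t, round(v / step) * step) for t, v in events]

recording = [(0.0, 0.0), (0.5, 0.25), (1.0, 1.0)]
reversed_take = time_invert(recording)
```

Because every filter takes a list of events and returns a new one, filters can be combined freely, e.g. `level_scale(time_invert(recording), 0.5)`.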

The re-map filter leads us to dynamic re-mapping, discussed next.

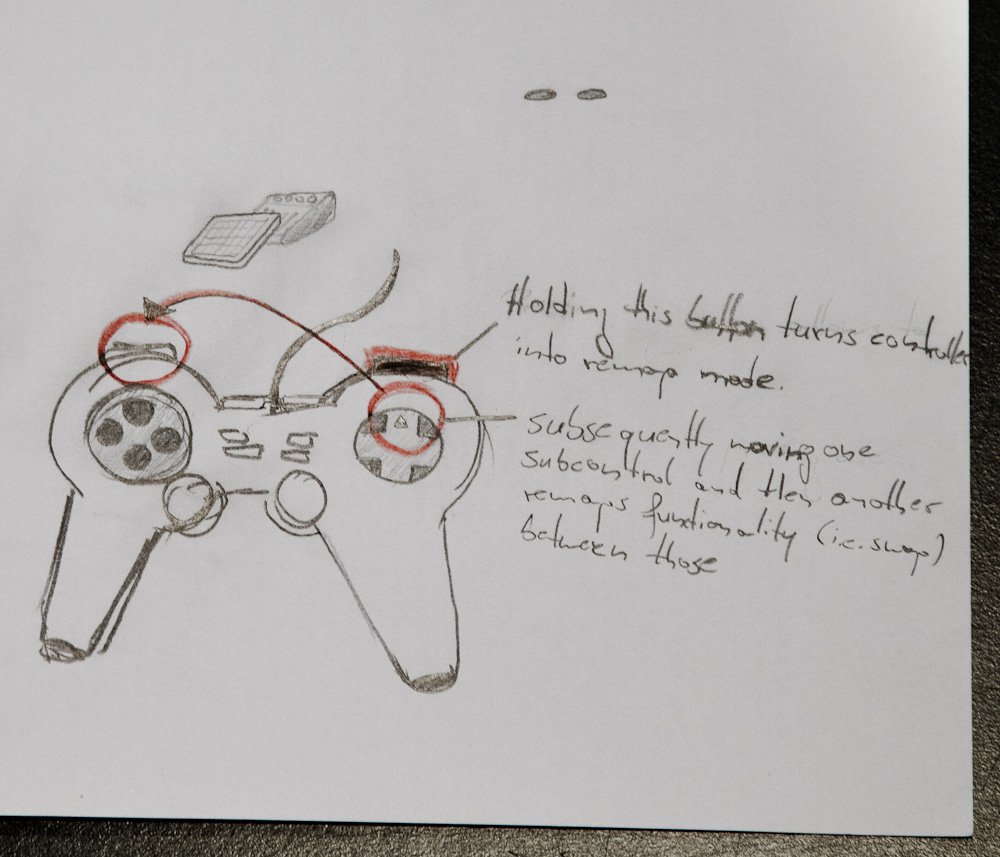

Re-mapping control streams on-the-fly

By holding a dedicated button on the controller and then actuating two sub-control modules (e.g. a joystick, a knob or another button), the performer makes these elements swap their mappings. For example, it is possible to swap the meaning of a fader move (e.g. controlling a sound’s frequency) with the meaning of a joystick’s deflection (e.g. controlling another sound’s filter frequency).
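The gesture described above could be sketched roughly as follows. This is only an illustration under my own assumptions: the mapping is a plain dictionary from control names to parameter names, and the class, method and parameter names (`Remapper`, `meta_button`, `actuate`, `synth1.freq`, ...) are all made up.

```python
class Remapper:
    """Hypothetical sketch: hold a meta button, touch two controls, swap their targets."""

    def __init__(self, mapping):
        self.mapping = dict(mapping)  # control name -> synthesis parameter name
        self._held = False            # is the dedicated meta button held down?
        self._touched = []            # controls actuated while it is held

    def meta_button(self, pressed):
        """Press or release the dedicated button; either way the selection restarts."""
        self._held = pressed
        self._touched.clear()

    def actuate(self, control, value):
        """Handle a control movement.

        While the meta button is held, movements select controls for swapping
        and produce no output (returns None). Otherwise the value is routed
        to the control's current target as a (parameter, value) pair.
        """
        if self._held:
            if control not in self._touched:
                self._touched.append(control)
            if len(self._touched) == 2:
                a, b = self._touched
                self.mapping[a], self.mapping[b] = self.mapping[b], self.mapping[a]
                self._touched.clear()
            return None
        return (self.mapping[control], value)


remapper = Remapper({"fader": "synth1.freq", "joystick_x": "synth2.filter_freq"})
before = remapper.actuate("fader", 0.5)

remapper.meta_button(True)        # hold the dedicated button
remapper.actuate("fader", 0.1)    # first element
remapper.actuate("joystick_x", 0.2)  # second element: the swap happens here
remapper.meta_button(False)       # release

after = remapper.actuate("fader", 0.5)
```

After the gesture, the fader drives the parameter that previously belonged to the joystick, and vice versa.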

Questions arising

Although these two meta-control mechanisms are fairly basic and easy to understand, they raise several issues regarding both practicability and intended behaviour:

Implementing the recording, playback and filtering mechanisms is pretty straightforward. Taken on its own, this meta-control paradigm is a good candidate for implementation at a low semantic level, without any semantic information about the controller or the synthesiser. However, the order in which the proposed playback filters are applied significantly influences the resulting behaviour. For the moment, I cannot see a clear argument for choosing one order over another. One possibility would be to leave this decision to the performer.
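A tiny Python sketch shows why the order matters. It applies level scale and level quantisation to a single value in both orders; the helper names and the numbers are my own assumptions, chosen only to make the difference visible.

```python
from functools import reduce

def level_scale(factor):
    """Return a filter that multiplies a value by `factor`."""
    return lambda v: v * factor

def level_quantise(step):
    """Return a filter that snaps a value to the nearest multiple of `step`."""
    return lambda v: round(v / step) * step

def chain(*filters):
    """Compose filters left to right; the caller (the performer) picks the order."""
    return lambda v: reduce(lambda acc, f: f(acc), filters, v)

scale_then_quantise = chain(level_scale(2.0), level_quantise(0.5))
quantise_then_scale = chain(level_quantise(0.5), level_scale(2.0))

a = scale_then_quantise(0.3)  # 0.3 -> 0.6 -> snapped to 0.5
b = quantise_then_scale(0.3)  # 0.3 -> snapped to 0.5 -> 1.0
```

The same two filters give 0.5 in one order and 1.0 in the other. Exposing something like `chain` to the performer is one way of handing over that decision.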

Questions arising from dynamic re-mapping mainly concern the increasing complexity of such a system. We know from sliding puzzles how difficult it is to get back to an intended state. In my opinion, helper mechanisms such as “reset to default”, “re-map to a mapping preset”, “undo”, or even a visual representation of the current state might be necessary.
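These helpers could be sketched as a small mapping store that keeps a history. Again, this is a hypothetical Python illustration, not an existing API: the class `MappingState` and its methods are made up, and the “visual representation” is reduced to a text dump.

```python
class MappingState:
    """Hypothetical mapping store with reset, presets, undo and a text view."""

    def __init__(self, default):
        self._default = dict(default)
        self.current = dict(default)
        self._history = []  # earlier mapping states, most recent last

    def _commit(self, new_mapping):
        # Remember the current state so that undo() can restore it.
        self._history.append(self.current)
        self.current = new_mapping

    def swap(self, a, b):
        """Exchange the targets of controls a and b."""
        new_mapping = dict(self.current)
        new_mapping[a], new_mapping[b] = new_mapping[b], new_mapping[a]
        self._commit(new_mapping)

    def load_preset(self, preset):
        """Re-map to a mapping preset."""
        self._commit(dict(preset))

    def reset(self):
        """Reset to default."""
        self._commit(dict(self._default))

    def undo(self):
        """Go back one step; does nothing if there is no history."""
        if self._history:
            self.current = self._history.pop()

    def describe(self):
        """Plain-text stand-in for a visual representation of the current state."""
        return "\n".join(f"{c} -> {t}" for c, t in sorted(self.current.items()))


state = MappingState({"fader": "freq", "joystick_x": "filter_freq", "knob": "amp"})
state.swap("fader", "knob")
state.swap("knob", "joystick_x")
state.undo()                 # back to the state after the first swap
after_undo = dict(state.current)
state.reset()                # back to the default mapping
```

Since `reset` and `load_preset` are recorded in the history as well, they can be undone like any other change.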

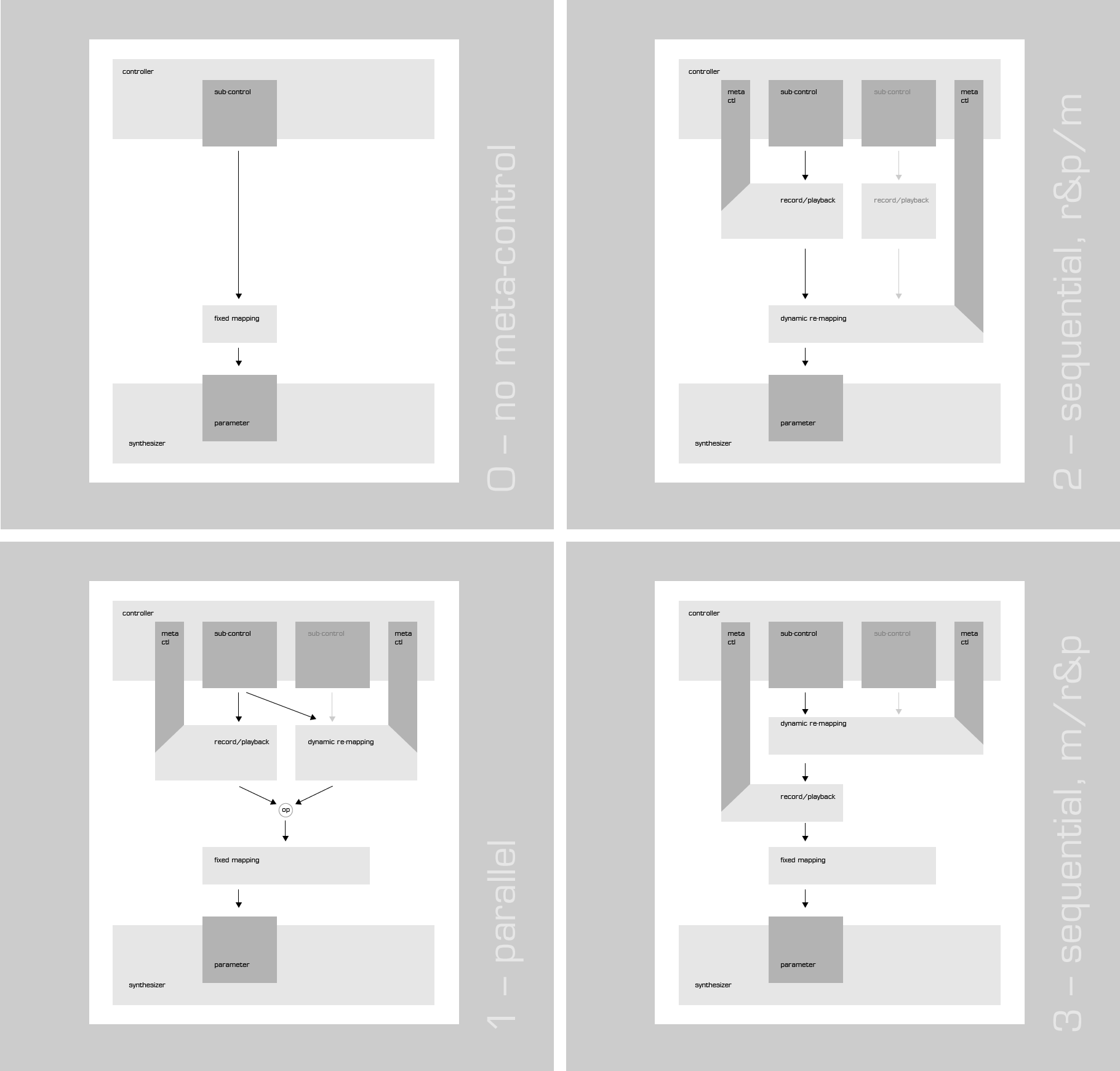

In order to implement both meta-control layers, a decision about the order of filtering has to be made. A parallel implementation (see figure, part 1) requires an additional operator that computes the actual parameter set for the synthesis process, based on both meta-control chains. IMHO, this decision cannot be made without serious semantic knowledge about the performance and the system’s intended perceptual output. In a sequential ordering, there are again two possibilities. Placing the record/playback system in front of the re-mapping means that played-back control data would also be re-mapped according to the current mapping state; a functionality I think is not necessarily intended and that limits the system’s expressiveness. Reversing the order, such that re-mapping happens before record/playback, gets rid of this restriction and allows a low-level implementation based e.g. on mix matrices.
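The second sequential variant can be sketched in a few lines of Python. I assume here that a mapping is a mix matrix with one row per synthesis parameter and one column per control, and that the recorder stores values after the matrix has been applied; the names and numbers are illustrative only.

```python
def apply_mix(matrix, controls):
    """Multiply a mix matrix (rows: parameters, columns: controls) by a control vector."""
    return [sum(w * c for w, c in zip(row, controls)) for row in matrix]

# Two hypothetical mapping states for two controls and two parameters.
identity = [[1.0, 0.0],
            [0.0, 1.0]]   # control 0 -> parameter 0, control 1 -> parameter 1
swapped = [[0.0, 1.0],
           [1.0, 0.0]]    # control 0 -> parameter 1, control 1 -> parameter 0

controls = [0.2, 0.9]

# Re-mapping happens first, so the recorder stores parameter-space values.
recorded = apply_mix(identity, controls)

# Later the performer swaps the mapping. Live input follows the new matrix ...
live = apply_mix(swapped, controls)

# ... while the recording is played back as stored, untouched by the swap.
playback = list(recorded)
```

Because the recording already lives in parameter space, changing the mix matrix afterwards only affects live input, which is the behaviour argued for above.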